About The Project

RAVEN (Real-time Adaptive Virtual-Twin Environment for Next-Generation Robotics in Virtual Production) makes Unreal Engine 5 (UE5) the place where robotic crew members are trained, validated, and deployed. The system embeds a live digital twin of the stage in UE5 and connects it to the Robot Operating System 2 (ROS 2), so that a humanoid robot can operate the camera (and, later, lighting and FX) with frame-accurate timing, low latency, and predictive safety.

Why now: LED/XR stages already deliver real-time pixels, but the physical layer (camera placement, lighting pose, practical FX) still relies on manual rigging and rehearsals that slow iteration and add safety overhead. RAVEN closes this gap by giving UE5 direct influence over robot motion and stage devices. Directors and DPs can then author, preview, and replay robotic camera moves entirely inside UE5, with safety zones visible in the scene and motion timed against LED scanout and the camera shutter (pose-at-display-time).
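One way to read the pose-at-display-time idea: rather than rendering with the most recently tracked camera pose, the renderer uses the pose predicted for the moment the frame is actually shown. The minimal sketch below assumes timestamped position samples on a shared PTP-disciplined clock and a fixed, known render-plus-scanout delay, and uses plain linear interpolation/extrapolation. `PoseSample`, `pose_at` and the 30 ms delay are hypothetical illustrations, not RAVEN's API.

```python
from bisect import bisect_left
from dataclasses import dataclass


@dataclass(frozen=True)
class PoseSample:
    t: float                         # timestamp (s) on the shared, PTP-disciplined clock
    xyz: tuple[float, float, float]  # tracked camera position (m)


def pose_at(samples: list[PoseSample], t_display: float) -> tuple[float, ...]:
    """Linearly interpolate (or extrapolate beyond the ends) the camera position
    at t_display. Hypothetical helper; samples must be sorted by t."""
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    times = [s.t for s in samples]
    i = bisect_left(times, t_display)
    # Pick the bracketing pair; clamp to the first/last pair when extrapolating.
    i = min(max(i, 1), len(samples) - 1)
    a, b = samples[i - 1], samples[i]
    alpha = (t_display - a.t) / (b.t - a.t)
    return tuple(pa + alpha * (pb - pa) for pa, pb in zip(a.xyz, b.xyz))


# Camera dollying along x at 1 m/s, sampled every 10 ms.
samples = [
    PoseSample(0.00, (0.00, 0.0, 1.5)),
    PoseSample(0.01, (0.01, 0.0, 1.5)),
    PoseSample(0.02, (0.02, 0.0, 1.5)),
]
t_display = 0.02 + 0.030  # latest sample time + assumed 30 ms render/scanout delay
print(pose_at(samples, t_display))  # approximately (0.05, 0.0, 1.5)
```

Linear extrapolation is the simplest possible predictor; a production system would likely also handle orientation (for example quaternion slerp) and cap how far ahead it extrapolates.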

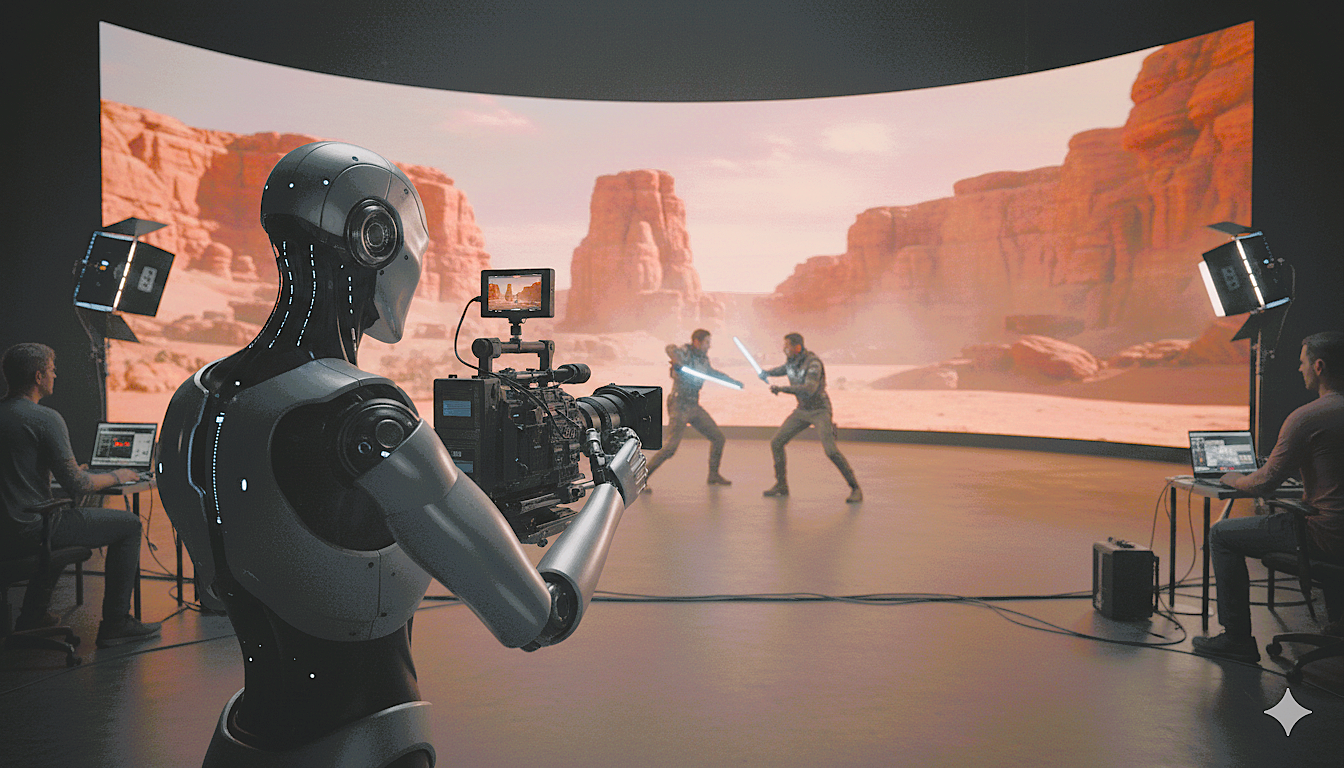

RAVEN concept (image generated by Nano Banana)

Key Features

- A UE5-to-ROS 2 bridge with QoS-tuned streams and Precision Time Protocol (PTP) time synchronization.

- A cinematography-aware control stack (model predictive control + learned policies) that respects framing, horizon, and continuity.

- Predictive Speed-and-Separation Monitoring (SSM) and keep-out volumes rendered in UE5 for human-robot safety.

- XR authoring and oversight tools (e.g., on Meta Quest headsets) that let creatives set paths, scrub takes, and validate safety before execution.

- Extensibility: a companion workstream, SCAR (Studio-Centric Autonomous Robot), coordinates ceiling-mounted lighting/FX on the same timeline and twin, enabling co-authored camera + lighting cues.
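Speed-and-Separation Monitoring is commonly formulated (for example in ISO/TS 15066) as keeping the measured human-robot separation above a protective separation distance that covers human travel, robot travel, robot stopping distance, an intrusion allowance, and sensing uncertainties. The sketch below uses a simplified constant-speed form of that distance and is the conventional, non-predictive check; a predictive variant would evaluate it against forecast rather than current separation. `SsmParams`, `protective_distance`, `must_stop` and every parameter value are hypothetical; the 80 ms reaction time merely reuses the safe-stop target from the Outcomes list.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SsmParams:
    """Hypothetical SSM parameter set (metres, seconds)."""
    v_human: float     # human approach speed toward the robot (m/s)
    v_robot: float     # robot speed toward the human (m/s)
    t_reaction: float  # time to detect a breach and issue a stop (s)
    t_stop: float      # time for the robot to come to rest (s)
    s_stop: float      # distance the robot travels while stopping (m)
    intrusion: float   # intrusion allowance C past the sensed boundary (m)
    z_human: float     # human position measurement uncertainty Z_d (m)
    z_robot: float     # robot position uncertainty Z_r (m)


def protective_distance(p: SsmParams) -> float:
    """Constant-speed protective separation distance S_p."""
    s_h = p.v_human * (p.t_reaction + p.t_stop)  # human travel until the robot is at rest
    s_r = p.v_robot * p.t_reaction               # robot travel before braking starts
    return s_h + s_r + p.s_stop + p.intrusion + p.z_human + p.z_robot


def must_stop(separation: float, p: SsmParams) -> bool:
    """Request a safe stop once the measured separation falls to S_p or below."""
    return separation <= protective_distance(p)


params = SsmParams(
    v_human=1.6, v_robot=0.5, t_reaction=0.08, t_stop=0.3,
    s_stop=0.075, intrusion=0.1, z_human=0.05, z_robot=0.01,
)
print(f"S_p = {protective_distance(params):.3f} m")        # S_p = 0.883 m
print(must_stop(0.80, params), must_stop(1.00, params))  # True False
```

The same distance could also drive the size of the keep-out volumes rendered in UE5, so that what the crew sees matches what the monitor enforces.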

Outcomes

- Performance: Command-to-camera-pose latency of ≤120 ms (p95) and pose-to-render latency of ≤50 ms (p95).

- Safety: Safe-stop activation in ≤80 ms upon a Speed-and-Separation Monitoring (SSM) breach.

- Efficiency: A 30–50% reduction in setup and retake time for complex motion shots.

- Reusability: Reusable “shot profiles” capturing common cinematographic maneuvers.

- Deliverables: The project will deliver UE5/ROS 2 packages, a sample project, comprehensive documentation, and public benchmarks to validate the system's performance.
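The latency targets above are stated as 95th-percentile (p95) bounds, so checking them against a benchmark run amounts to computing percentiles over logged measurements. A minimal sketch, assuming latencies are logged in milliseconds per metric: the metric names are hypothetical, nearest-rank is only one of several percentile definitions, and treating the safe-stop bound as a worst case (100th percentile) is an assumption, since that target is not stated as a p95.

```python
# Hypothetical metric names; limits taken from the Outcomes list.
TARGETS: dict[str, tuple[int, float]] = {
    "command_to_pose_ms": (95, 120.0),  # p95 <= 120 ms
    "pose_to_render_ms": (95, 50.0),    # p95 <= 50 ms
    "safe_stop_ms": (100, 80.0),        # assumed worst case: max <= 80 ms
}


def percentile_nearest_rank(samples: list[float], pct: int) -> float:
    """Smallest sample such that at least pct% of samples are <= it."""
    if not samples or not 0 < pct <= 100:
        raise ValueError("need samples and 0 < pct <= 100")
    ordered = sorted(samples)
    rank = (pct * len(ordered) + 99) // 100  # integer ceil(pct / 100 * n)
    return ordered[rank - 1]


def check_targets(measurements: dict[str, list[float]]) -> dict[str, tuple[float, bool]]:
    """Map each metric to (value at its target percentile, target met?)."""
    report = {}
    for name, (pct, limit_ms) in TARGETS.items():
        observed = percentile_nearest_rank(measurements[name], pct)
        report[name] = (observed, observed <= limit_ms)
    return report


# Illustrative numbers only, not measured results.
measurements = {
    "command_to_pose_ms": [float(v) for v in range(30, 130)],  # 100 samples, 30..129
    "pose_to_render_ms": [20.0, 25.0, 31.0, 44.0, 48.0],
    "safe_stop_ms": [52.0, 61.0, 77.0],
}
for name, (observed, ok) in check_targets(measurements).items():
    print(f"{name}: {observed:.0f} ms -> {'PASS' if ok else 'FAIL'}")
```

With these made-up samples the command-to-pose metric fails (its p95 is 124 ms) while the other two pass, which illustrates why the targets are phrased as tail percentiles rather than averages.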

Our Team

Contact

For any enquiries, please reach out to us at raven.modie@gmail.com / xiaotian.dai@york.ac.uk.